I was recently speaking with an underwriter for one of the highest performing London Market syndicates. He told me how they’d recently decided, after several decades of business, to start using databases rather than Excel spreadsheets for their operations. As a technical consultant specialising in insurance data, it was a conversation I’ve had before.

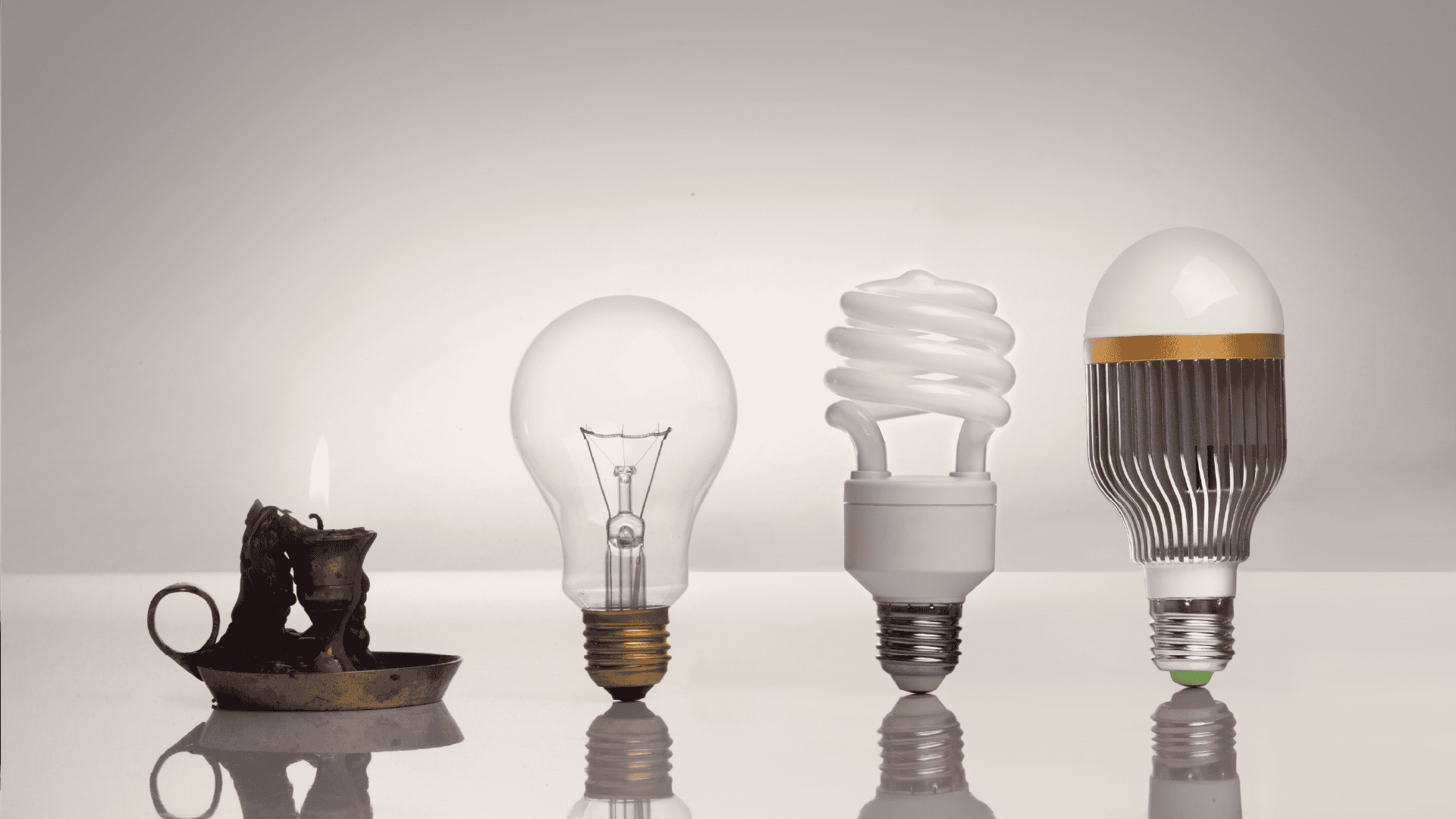

In those situations, it’s my job to champion technical and scalable solutions, but there are many good reasons for him to have kept the late adoption mindset. By staying at the back of the adoption curve, his syndicate could be certain that any new tech they adopted was (in his words) “battle tested” and sure to work in an industry where a miscalculated risk could spell the end for them.

Insurance is a cautious industry with good reason, and both regulatory and compliance concerns can add a lot of overhead to trialling new digital products, especially for speciality insurers.

When caution starts to work against you

This instinct towards caution, however, creates a long-term risk. The longer you hold onto your tools and processes, the more deeply they embed into your business, making it harder to modernise when you really need to. Using caution as an excuse to save effort in the short term only makes this problem worse.

Beyond the obvious cost of delaying adoption (the time you spend operating with outdated technology), there’s a hidden cost. Time spent using new technology is time spent learning about it, and failing to build those skills in your teams in the name of caution is the greater cost.

We’ve seen this first-hand in insurance. In our work with Zurich UK, building modern data engineering capability early enabled faster business insights and reduced long-term dependency on legacy systems, shifting the focus from delivery speed to organisational understanding.

This is doubly important because writing software and building systems is not the bottleneck it once was. Instead, organisational knowledge and domain understanding are the most important constraint you have, since they dictate whether the systems you build solve the problems you have.

Furthermore, caution doesn’t change the fact that AI and other new technology will provide a competitive advantage to companies that make proper use of it. If a truly disruptive solution arrives, success will be based on when you adopt it, not whether you do.

I believe the key to success over the next few years will be the trade-off between reasonable caution and confident adoption of new generation technology, so we’re now going to explore how to move faster without increasing risk.

Modern platforms unlock new possibilities

Building systems (in the broadest sense) used to take a long time, but thanks to modern tools and platforms that’s no longer the case. Admittedly, that statement has been true for at least the last 20 years, but I do believe we’ve recently crossed a threshold.

In my area of expertise, Data Engineering, “modern platforms” such as Snowflake or Databricks are about ten years old. But while they spent the first era of their existence developing as data warehousing solutions, more recently they’ve started expanding to other parts of your data estate, and that’s where the value really comes from.

These platforms can centralise concerns such as governance, lineage and access. They integrate tools like dbt and Streamlit into their offerings and provide tools for users over a wide range of technical competency. Many firms will also have multiple data solutions running in different parts of their business, hence the prevalence of meta-platforms such as Microsoft Purview or Collibra, which provide higher level data estate management.

Each of these platforms and meta-platforms has its value and a part to play, but often their capabilities aren’t fully understood, and getting the most out of them requires being an expert not only in the tools, but also in the case-by-case problems they are being applied to.

The real bottleneck has changed

The new generation of platforms, if used properly, allows new data products to be built much faster than they could be before. Add in the multiplicative speed-up that AI allows, and building a system can now be much faster than defining what it should do.

The importance of this shift cannot be overstated. To achieve these kinds of improvements, your teams need to be experienced in using modern platforms and your organisation needs to have a robust and well-documented setup to allow them to succeed. But while they need to be competent with the platforms and tools, they need to be experts in the domain they are building for, because the bottleneck is now alignment between the system as built and the end users’ needs.

If a system is built quickly without deep understanding of the domain, it risks either not serving the users’ needs or serving them in an inaccessible way. The latter case is particularly common: an IT team will insist the product works, but the users disagree. Either outcome, however, will likely lead to the users creating a shadow tech layer to work around the perceived failings of the system, and this will only get worse if future iterations don’t stop and take the time to learn not only what the users need but also how they work and how they think.

Through this lens, it becomes clear that for most use cases, competitive advantage is not going to come from technical novelty and ingenious, never-before-seen solutions. It’s going to come from collaboration and understanding between your IT and the rest of your business, and a digital architecture which promotes learning speed and adaptability.

Building for now without locking yourself in

Now more than ever, the future is difficult to predict. We can be confident that there will be disruption from AI and continued innovation from data platform providers, but we can only speculate on the exact impact that it will have on the insurance industry.

This makes it very difficult to plan for what your digital architecture should look like in two years’ time, let alone further along.

This means you shouldn’t be afraid to build for the short term. Focusing on what you need now reduces your exposure to the risk of further technological change by avoiding commitment to ideas which may not prove useful.

When your needs change, you can then choose whether to extend or rebuild your existing systems. You can make this decision safely because the retained domain knowledge allows your IT teams to tackle either problem. In both cases, the experience, context and technological improvements that will have happened in the meantime allow you to avoid the guesswork of trying to build for an unknown future.

That’s not to say you shouldn’t think long-term, but that your long-term strategy can arise from your short-term needs. By solving the problems you have now, you’ll embed the domain knowledge in your teams such that when you know what you need later, you’ll be best placed to build it in the blink of an eye.

Domain-driven design: building systems around how the business thinks

The idea of technical architecture mirroring domain concepts is not new. Domain-Driven Design (DDD) is a software architecture philosophy and set of practices based around the idea that technical architecture should align with domain concepts and include a “Ubiquitous Language” – a shared understanding of the concepts involved between the users and the developers.

By leaning into DDD, you promote closer collaboration between your technical architects and your domain experts by design. This naturally creates an environment where organisational learning is championed and preserved, with organisational and domain knowledge being the key long-term asset of the digital system.
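To make the idea of a “Ubiquitous Language” concrete, here is a minimal sketch of a domain model whose names are the words an underwriter would use. Everything in it is an assumption for illustration: the `Policy` class, its fields and the deliberately simplified excess-then-limit calculation are hypothetical and do not reflect any particular syndicate’s wordings or systems.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal


@dataclass(frozen=True)
class Policy:
    """A policy described in the underwriter's own terms (hypothetical fields)."""

    policy_number: str
    inception_date: date
    expiry_date: date
    limit: Decimal   # assumed: the most the insurer pays on a single loss
    excess: Decimal  # assumed: the amount the insured bears before cover applies

    def is_on_risk(self, on: date) -> bool:
        """True if the policy provides cover on the given date (inclusive)."""
        return self.inception_date <= on <= self.expiry_date

    def recoverable_amount(self, loss_date: date, loss_amount: Decimal) -> Decimal:
        """Simplified rule: nothing if off risk, otherwise the loss net of
        the excess, capped at the limit."""
        if not self.is_on_risk(loss_date):
            return Decimal("0")
        net_of_excess = max(loss_amount - self.excess, Decimal("0"))
        return min(net_of_excess, self.limit)


# Example usage with made-up figures.
policy = Policy(
    policy_number="P-001",
    inception_date=date(2025, 1, 1),
    expiry_date=date(2025, 12, 31),
    limit=Decimal("1000000"),
    excess=Decimal("5000"),
)
payable = policy.recoverable_amount(date(2025, 6, 1), Decimal("20000"))
```

The point is less the arithmetic than the vocabulary: a domain expert can read `is_on_risk` or `excess` and say whether the rule is right, which is much harder when the same logic sits behind generic names like `flag_1` or `adj_amt`.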

A new definition of prudence

Caution is important, but as with all parts of insurance it comes down to risk. If you lose your ability to react to new technologies, you will risk your competitive position.

Whilst the principles of organisational change may remain the same over time, AI promises to radically upend the practices.

You can prepare for this, though. Shorten the lifecycle of your digital architecture and promote change management to a first-class capability, so that you can adopt disruptive products as quickly as possible with minimum friction.

In the new world of accelerating tech, prudence does not mean delaying adoption. Instead, it’s about limiting exposure to the risks of new technology whilst still adopting it and learning about it in whichever ways you can.

Competing on adaptability

New generation tools (both AI and modern platforms) can allow your IT platforms to develop and change much more quickly, but the teams building them have to be both digital and domain experts to reap those benefits.

This makes expertise and organisational knowledge the new key asset, so prioritising learning through new initiatives is vital. This knowledge cannot be gained second-hand, so the better definition of caution now includes continuous and prudent adoption of new technology to promote adaptability.

This adaptability is what will set you apart as we navigate the current uncertainty around what the “best” tech looks like. Playing it safe might have worked in the past, but going forward it will leave you wishing your organisation understood how to truly leverage the latest solutions.

Need a second opinion?

If you’re exploring modernisation in insurance and want a sounding board, I’m always happy to talk through options and trade-offs.

And if you’re interested in how we design secure, resilient platforms that support modernisation at scale, check out our Future Systems Engineering practice.

Change the modernisation conversation

Our customer lookbook helps you:

- Drive better conversations with CFOs, CROs, and CEOs

- Share common challenges

- Draw inspiration from real success stories in financial services and insurance

Whether you’re just beginning or need to re-energise a stalled programme, our lookbook helps you drive more compelling conversations to align stakeholders around the outcomes that matter most.