I once watched a video which demonstrated a series of progressively quicker ways to sum the numbers one to *a lot*. Through a series of clever multithreading techniques and technical optimisations, the video author was able to achieve impressive improvements over their initial Python implementation. But here’s the twist: at the end, they revealed that Gauss’s famous formula for summation of an arithmetic series was many orders of magnitude quicker, completely obliterating all those hard-won gains.

At the time, I thought this was quite a frivolous point to make, but it clearly stuck with me, because I was reminded of it months later while trying to speed up some stubbornly slow data transformations.

Anatomy of a slow data transformation

I was working on a project for a client in the energy industry and had written some PySpark to transform a few thousand rows of industry-specific data. My first implementation chugged along for 30 minutes before I stopped it – this was far too slow for our needs, especially given the relatively small amount of data.

I profiled what was taking so long and it appeared that the culprit was some complex date operations that needed to be performed on multiple fields in each row.

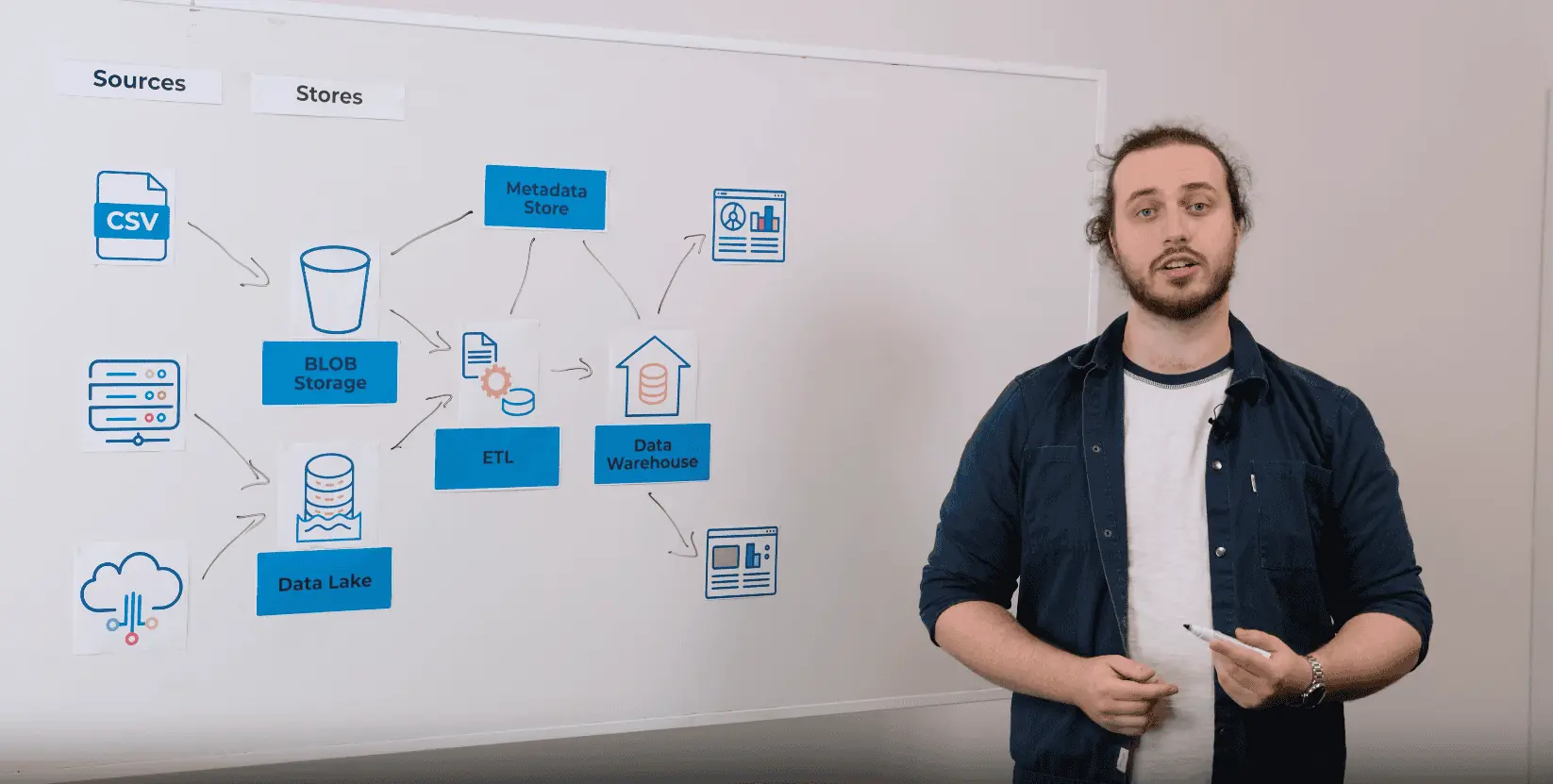

Unfortunately, the operations were too complex to take advantage of Spark’s inherent parallelisation, so my initial instinct was to look for some technical solution to make the date operations quicker. I batched my database calls and ensured that our date operations were being carried out on NumPy’s date representation, which is quicker to operate on than Python’s native dates.

This effort did result in quicker operations, but the whole transformation still chugged along for 30 minutes before I called a halt.

It was becoming clear that incremental improvements in the speed of date operations were not going to be enough. Based on the profiling I had done, we needed this transformation to be at least a couple of orders of magnitude quicker.

We can do more; but should do less

When trying to improve performance, developers most commonly look for three types of solutions:

Option 1: throw more compute at it

Nowadays, with all the various pay-as-you-go compute services out there, it can appear that the only barrier to using more compute is financial, not technical. But that financial barrier is a significant one. Lying behind it are the ever-rising environmental costs of cloud computing, which we should all be working to mitigate.

It’s easy to use more compute: either rent a bigger machine or make greater use of parallelisation, but this is often wasteful. It’s our job to deliver performant solutions. There’s no reason to use a big machine, or lots of little machines in a trench coat, when a small one will do.

Option 2: use more efficient tools and techniques

Here I’m referring to methods that usually deliver generalised improvements and can be applied to similar technical problems in any domain: using a more efficient library, for example, or batching network calls.

Don’t get me wrong, incremental technical improvements like this are both useful and fun: everyone wants to try out new tools and to write more efficient code. Better code and tooling will deliver genuine improvements, but we must recognise when improvements of this kind just won’t meet the scale of the problem.

Sometimes going faster isn’t enough.

Sometimes you just have to stop and think.

Option 3: approach the problem with fresh eyes

My colleague, Ben Below, gave a talk at StaffPlus Conference 2024, which I really recommend watching. While trying to optimise the memory consumption of a genome-matching algorithm, he realised that the worst-case memory requirements of their approach were orders of magnitude greater than the size of all the data in the world. Since they couldn’t download enough RAM to store every 0 and 1 that humanity has ever produced, Ben and his team at Softwire instead applied their knowledge of how genome matching worked and approached the problem in a completely different way, which allowed them to significantly reduce the number of calculations they had to do.

In my case, I was clearly asking the computer to do too much. If I were going to achieve the orders of magnitude of performance improvements I needed, tinkering around the edges wouldn’t be sufficient. I would need to find a way to do less work.

So, it was time to stop and think.

Know your data; know your domain

If a series of operations on dates is taking too long, then it makes sense to look at the dates themselves. When I looked at the dataset I was profiling, I noticed a pattern of repetition: in the thousands of rows of data I had, there were fewer than a hundred unique dates. The answer was obvious: just cache the result of each date operation and use the cached value whenever we have seen that date before.
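The caching idea can be sketched in a few lines of plain Python. This is an illustration under stated assumptions rather than the code from the project: `expensive_date_operation` is a hypothetical stand-in for the real date logic (here it just finds the next weekday), and the row data is made up to mimic many rows sharing few distinct dates.

```python
from datetime import date, timedelta
from functools import lru_cache


@lru_cache(maxsize=None)
def expensive_date_operation(d: date) -> date:
    """Hypothetical stand-in for the slow date logic: find the next weekday."""
    next_day = d + timedelta(days=1)
    while next_day.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        next_day += timedelta(days=1)
    return next_day


# Made-up data: 3,000 rows drawn from only 30 distinct dates.
rows = [date(2024, 1, 1) + timedelta(days=i % 30) for i in range(3000)]

unique_dates = len(set(rows))
results = [expensive_date_operation(d) for d in rows]

# cache_info() reports how often the real work was actually done.
info = expensive_date_operation.cache_info()
print(f"{len(rows)} rows, {unique_dates} unique dates")
print(f"computed {info.misses} times, served from cache {info.hits} times")
```

With data shaped like this, the expensive function body runs once per unique date rather than once per row, so the amount of real work scales with the number of distinct dates, not the size of the dataset.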

That’s all well and good, but how would I know that it won’t fall over the moment it’s exposed to a different dataset? How could I be sure that there won’t be thousands of unique dates in some other dataset and that we’ll be right back at square one?

There’s no way to answer this by looking at tables of contextless numbers and dates; the only way we can answer these kinds of questions is if we actually understand what our data means: where it comes from, what its purpose is, what business processes it relates to, and more. And we can only appreciate those things if we have a firm grip on our domain.

For my problem above, I was able to deduce that these repeating dates would always repeat because I knew that they were produced by multiple parties reporting the same type of data on the same schedule as each other. I only knew that because I had spent time talking to our client, and to end users, about the business processes that generated this data, including what they were for and what they hoped to achieve.

As Data Engineers, we will find that taking the time to get to know the domain we are working in is well worth the investment. Not only will it ensure we deliver the outcomes our business actually needs, but our technical results will benefit too.

What would Gauss do?

So why was I reminded of that video about summing numbers when I encountered this problem? Well, it’s because I think Data Engineers can learn a couple of things from it.

Firstly, that no matter how good you get at mental arithmetic, summing the numbers from 1 to 1000 would take quite a long time without Gauss’s formula. Sometimes we will be presented with problems where doing the same thing faster will never be enough. Instead, we will have to think about the non-obvious things and look for patterns we can exploit.

Secondly, Gauss really knew his number theory. Gauss’s Summation Formula came from his understanding of the relations between numbers in an arithmetic series; we must explore the relationships in our data in a similar way.
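To make the comparison concrete, here is a minimal Python sketch of the two ways to sum the numbers from 1 to 1000. The function names are illustrative and not taken from the video. The loop does one addition per number, while the formula n(n + 1)/2 does a fixed amount of work however large n gets.

```python
def sum_by_loop(n: int) -> int:
    """Add the numbers 1..n one at a time: work grows with n."""
    total = 0
    for k in range(1, n + 1):
        total += k
    return total


def sum_by_formula(n: int) -> int:
    """Gauss's formula for 1 + 2 + ... + n: constant work regardless of n."""
    return n * (n + 1) // 2


n = 1000
print(sum_by_loop(n))     # 500500
print(sum_by_formula(n))  # 500500
```

Both functions give the same answer, but no amount of tuning the loop will close the gap with the formula as n grows, which is the same lesson as the cached date operations: the biggest win came from doing less work, not from doing the same work faster.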

So, when we are presented with a stubbornly slow data pipeline, our first instinct should be to look for patterns in that data and think about how we can exploit them. To do that we must understand our data, and for that there is simply no substitute for expertise in our business domain.