In our latest Digital Lighthouse episode, Zoe Cunningham is joined by Daniel Whitston, CTO of AutogenAI.

The adoption of AI tools is on the rise, with a large number of them utilising the large-scale language model, GPT-3. While these models can solve difficult problems, they tend to be trained on a large corpus of the entire internet and may not capture an individual company’s voice or values. Daniel discusses how CTOs can embrace and use AI large language models in their company, and shares how AutogenAI is bringing AI-generated outputs closer to company needs.

Learn more by listening to the podcast or reading the full transcript below.

Digital Lighthouse is our industry expert mini-series on Softwire Techtalks, bringing you industry insights, opinions and news impacting the tech industry, from the people working within it. Follow us on SoundCloud to never miss an episode.

***

Transcript

Zoe Cunningham: Hello and welcome to the Digital Lighthouse. I’m Zoe Cunningham.

On the Digital Lighthouse, we get inspiration from tech leaders to help us to shine a light through turbulent times. We believe that if you have a lighthouse, you can harness the power of the storm.

Today I am super excited to welcome Dan Whitston, who is the CTO at AutogenAI. Hi Dan, and welcome to Digital Lighthouse.

Daniel Whitston: Hello, Zoe. It’s a pleasure to be here.

Zoe: Can I ask you to start by telling us a bit about how you got to your current role and what your role entails?

Dan: Absolutely. I’ve been working in tech for quite some time and I’ve handled the tech function of businesses before that have operated in the software-as-a-service space, normally B2B. Before that though, before I came into tech, before I was a developer, before I moved into management and then took on the CTO role, I worked in bid writing for about a decade or so. I helped to put together tenders or bids for large-scale government contracts. The main thing that’s brought me to AutogenAI today is that I have this combination of understanding of the whole field of tender writing and also of tech.

Zoe: Amazing. Now you are in a role where, essentially, you’ve got the main oversight for all of the technology within AutogenAI.

Dan: That’s correct. I’m the CTO. That means that I’m not necessarily a specialist in any one part of the technology stack, but I have an overview across all of it to understand how it can contribute to the business and can also speak on behalf of technology to the other people in the business, to make sure that the interface between all the parts works as well as it can do.

Zoe: Amazing. Obviously, I’m biased, but such an important role. This has got to be the key to our whole chat. What is AutogenAI?

Dan: There’s a lot of stuff about AI at the moment, in business, in the press, among people I know. It’s a part of technology that has really taken off in the past few years, and that’s been driven by some huge developments in large-scale models. The ones we normally look at are large language models, but there are other kinds as well that generate images or video, or that solve problems that are often very difficult to solve. The one that hasn’t made as many waves yet, but is at least as important as the image or video AI generation stuff, is language generation. That’s the main one that AutogenAI is looking at at the moment.

Now, for a couple of years or so, since GPT-3 came on the scene, people have been gradually picking up on it and have been using language AI to generate text, to do their work, whatever that work is. Professionally, it’s up to five times the speed, and it genuinely makes people a lot faster at creating professionally written content to meet whatever their need is, whether that’s fiction, non-fiction, or technical work, blog posts, the lot. Now, all of that, so far, has been on an individual level.

The people who have picked this up and run with it are the ones who are benefiting, but when it comes to large organizations, corporations, big companies, they don’t really have a great way into that at the moment. What AutogenAI does is it provides a way for them to engage with language models and text generation to help them do the kinds of work that they need to do more efficiently and to take advantage of this opportunity that’s arisen in a more cohesive and combined way. The other big thing we can do, of course, is those large language models, as they’re called, that are used individually, tend not to be trained up for the individuals who are using them.

They’re trained up on a large corpus, on the entire internet, and that makes them very good generally, but they won’t necessarily capture an individual company’s voice or values in the same way that’s possible if you take all of the text, the corpus of a company, and feed it into more of a bespoke model. It’s partly about helping companies to engage with this, and it’s also about helping the models themselves to engage with the companies, to bring them close to company needs.

Zoe: You are forming a bridge because of course, if you are a CTO, of, say, a large government outsourcer, it is your job to be on top of technological development to know what’s going on. It’s not possible to be an expert and it’s not possible to work across everything that’s going on. This is where using a company like AutogenAI allows you to have access to that specialist knowledge about large language models in this case, and find out how to apply that within your business. If you’ve got some examples of what it would be used for and why it’s important, really. Why is it important for CTOs to know about these technologies and use them?

Dan: I’m already using this technology daily within my role. When there’s anything I need to write, the first thing I do is I pick up our tool, our internal model. The first version is called Genny-1. That already is helping me in my role. What I will say is that it is one of those things that, it’s not mine precisely, but it is a thing where people often need a bit of help to get over the initial hurdle.

If your job all day, every day is writing, and you are very used to your existing tools and technologies and routines, then adapting to this new technology, learning how to take advantage of it properly, does need a bit of engagement, a bit of push to help you through. That’s why so many other approaches to this focus on attracting individuals who are willing to put in that work to make the leap. Whereas, with us, we’ve chosen maybe the slightly harder option of going to companies as collections of people and trying to help them collectively to make this jump.

Zoe: Great. Actually, bringing your expertise to maybe help organizations deliver on their objectives by bridging, it’s all back to bridging gaps again. What I really want to know is, we’ve talked a lot about how you are using large language models, which is a new form of tech, but what exactly is a large language model?

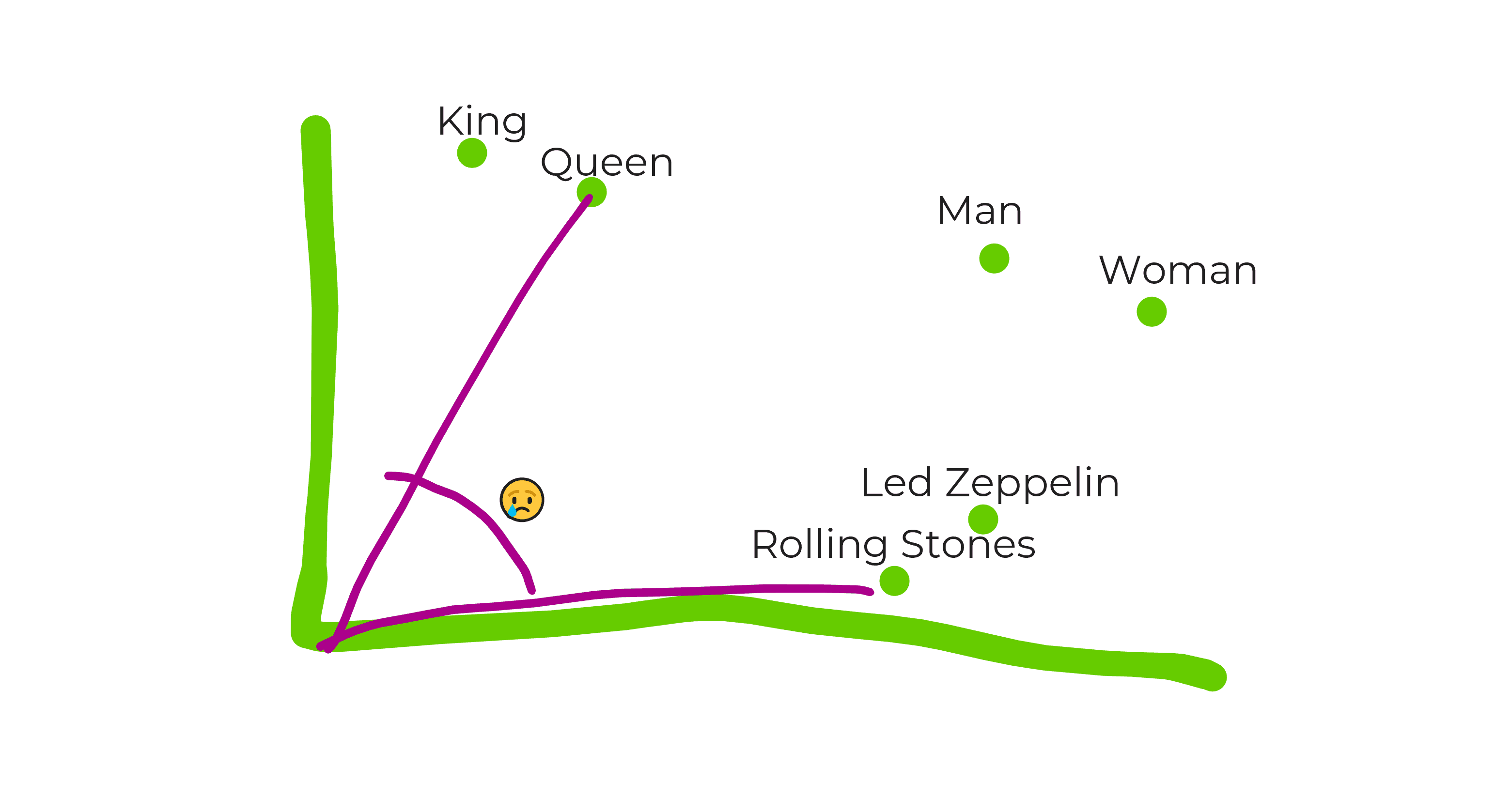

Dan: Many people have tried to explain this. This is my version of it. You are very welcome to read up on it elsewhere. The obvious large organizations in the AI space, people like OpenAI or Google, Facebook, Microsoft, and so on, take a vast amount of text content that they have sourced mainly from the internet, something called Common Crawl. Also stuff like Wikipedia, any other corpora they can get their hands on, and they feed it to a neural network of sorts, a big brain, if we want to call it that. What the network does, what the big brain does, is it learns how to talk like the content it is given.

If you feed it some input once it’s been trained, then it will take that input and it will try to figure out which words, based on all of the stuff it’s learned, are most likely to come next. Which sounds trivial. It doesn’t sound like a big thing, but that means you can give it a prompt and, without copying directly from anything that it knows, because it’s been trained on everything, it is able to produce readable, well-structured text that answers the prompt that you gave it, and it is able to do that at a scale and speed that are absolutely impossible for a human being.

Zoe: It’s a bit like, there’s a lot of these little games that go around on Facebook where they give you the start of the sentence and then they say, “Now, click the middle auto-complete on your phone until you get a sentence.” Obviously, it comes up with something ridiculous. It’s that idea of predicting the next word. Obviously, because it’s got so much content that is woven together in this complex way, it’s producing much better results. It’s actually producing something that is much more like the text that’s already been fed in, rather than just something that sounds a bit silly.

Dan: I think that’s about it. We’ve had for a very long time the auto-complete thing where you just take the probability of the next word. The highest one is the one that you put in. This is a few steps beyond that. It’s more the case that it’s able to look at the totality of what was written before and to figure out which words are the actually important ones, then to generate text with a slightly wider view. As you’re aware, the ones that go on essentially a walk, that only remember the current thing and don’t have any state longer than that, do tend to descend into nonsense fairly quickly. Much like ELIZA did back in the day, for example.
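To make the simple auto-complete that Dan describes concrete, here is a minimal Python sketch. It is only an illustration of the "pick the most likely next word" idea, not how a large language model or AutogenAI's tooling works; the function names and the tiny training text are made up for this example.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    followers = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def autocomplete(followers, start, max_words=8):
    """Greedy completion: always append the most frequent follower of the last word.

    Only the last word is remembered, so there is no longer-term state.
    """
    out = [start]
    for _ in range(max_words):
        options = followers.get(out[-1])
        if not options:
            break  # the last word was never followed by anything in training
        out.append(options.most_common(1)[0][0])
    return " ".join(out)

# Hypothetical training text, standing in for someone's phone messages.
corpus = "see you next week . see you next week . see you next time . see you soon"
model = train_bigrams(corpus)
print(autocomplete(model, "see", max_words=3))
```

Because the completion only ever looks at the last word, raising `max_words` makes it loop around the same phrase, which is the "descending into nonsense" behaviour mentioned above. A large language model differs in that it conditions on the whole of the preceding text.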

Zoe: Exactly. Or in my case, like, “see you next week”, which is obviously what I’m always typing into my phone and not suitable. What is it that AutogenAI do to make it easier for people to interact with the large language model? What is it that you’ve added beyond it just being something where you type in some text and it’s a bit of fun? What kind of interface enhancements do you have?

Dan: We have something of a job of, strange as it sounds, translation between the way that you need to talk to the AI in order to get what you want and the way that actual users expect to be able to interact with a tool in the first place, and the kind of tools they need to get their jobs done. There is, in fact, a whole discipline that’s emerged around this called prompt engineering, which didn’t exist as a term even two years ago, I think, but we now have an entire prompt engineering team that spends all day, every day figuring out how to, as you said earlier, bridge that gap.

How to understand what prompts, what instructions to give to the AI in order to generate content that meets the needs of the users, which is obviously the people writing stuff in the companies that we’re serving.

Zoe: Say I wanted to interact with it I guess maybe to write a blog post or something that I might be doing, I suppose I would want to be like… Let’s say, I’m writing a blog post about artificial intelligence, so I suppose my instinct would be to literally write down exactly that, “Write me a blog post about artificial intelligence.” How would that experience be different with using AutogenAI?

Dan: You could put literally that into a playground, if you want to call it that, into a direct interface to a large language model. It would make a creditable attempt at starting it, but you wouldn’t usually just put that in and get an entire blog post out. You wouldn’t necessarily get the structure that you’re looking for immediately. You would have to interact with it many times over the course of the post to instruct it on how to tweak individual bits.

Some of that is learned through experience of direct interaction, so that is the thing that will improve with time. Some of it you just have to keep trying and retrying to get the result that you want. There’s room in all of that for an intervening interface and a piece of prompt engineering work to smooth the process and automate a lot of those steps. We can, for example, issue 10 different sets of prompts to a language model, and then we can show the results very quickly.

We can filter out the ones that we know not to be useful for the purposes that we’re aiming at. The ones that are left, you can pick one from. You can then click a button to expand it. You can draw, and this is kind of our most obvious thing. You can draw a bunch of ideas about ways to explain AI or different things that AI might be useful for. Then you could convert each of those ideas into a paragraph at the touch of a button.
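The workflow Dan outlines here (send several prompts, filter the results, pick one, expand it) can be sketched roughly as follows. This is a hedged illustration only: `candidate_ideas`, `expand`, the prompt templates and the `fake_model` stand-in are all hypothetical names invented for this example, and no real model API or AutogenAI code is being shown.

```python
from typing import Callable, List

def candidate_ideas(topic: str,
                    templates: List[str],
                    generate: Callable[[str], str],
                    is_useful: Callable[[str], bool]) -> List[str]:
    """Send one prompt per template and keep only the useful, distinct outputs."""
    kept: List[str] = []
    for template in templates:
        text = generate(template.format(topic=topic)).strip()
        if is_useful(text) and text not in kept:
            kept.append(text)
    return kept

def expand(idea: str, generate: Callable[[str], str]) -> str:
    """The 'touch of a button' step: turn one chosen idea into a paragraph."""
    return generate(f"Write one paragraph developing this idea: {idea}")

def fake_model(prompt: str) -> str:
    """Stand-in for a language model call (an assumption, so the sketch runs offline)."""
    if prompt.startswith("Write one paragraph"):
        return "PARAGRAPH[" + prompt.split(": ", 1)[1] + "]"
    # Pretend the model returns nothing usable for one of the prompts.
    return "" if "joke" in prompt else f"Idea from: {prompt}"

templates = [
    "List a way to explain {topic}.",
    "Name a business use of {topic}.",
    "Tell a joke about {topic}.",
]
ideas = candidate_ideas("AI", templates, fake_model, is_useful=lambda t: len(t) > 0)
paragraph = expand(ideas[0], fake_model)
print(ideas)
print(paragraph)
```

In a real tool the filter would encode whatever the team has learned about which outputs are not useful for a given purpose, and the user, rather than `ideas[0]`, would choose which idea to expand.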

It’s kind of the difference between, say, a text engine such as, I won’t say LaTeX, but let’s say Vim for the more technical people out there, and something like Microsoft Word, for example, or some other higher-level process. I think that distinction between high and low level is probably key to understanding this. High-level stuff, as you know, allows you to be more productive if used correctly. Especially if it’s something that was put together for your purposes, it will have a lot of stuff in there that you don’t need to spend 10 times as long getting to.

Zoe: I think I’ve got it. With Word, for example, or if you had a more basic software than Word, then maybe when you wanted to write the title, you’d have to work out how big that was and what kind of font you wanted it in. Whereas in Word, because it’s set up for people writing business documents, there’s all these preset things such as clicking to say this is a title, this is a subheading, and then I can see that’s much more productive than if you have to go and do that individually.

I suppose the other thing about what you’re saying is it’s a bit like going through step by step. I guess, so for me, it made me think of if I was managing a junior member of staff then if I say, “Go and write a blog post.” A new junior member of staff, I almost don’t know what I’m going to get back. Whereas if I say, “Write the first paragraph this way, write the second paragraph this way.” It’s that kind of idea, right?

Dan: Pretty much, yes. It’s a helping hand to being productive more quickly. Part of it is providing essentially a short interface that you can interact with directly instead of trying out lots of different commands. That’s all on the UI side and that’s important and it’s what I spend a lot of my day helping to put together.

Obviously, it’s worth pointing out that the actual models we draw from are based around companies’ individual needs as well. We’re tailoring the interface to the company’s needs in terms of the actions that are provided, and also the model itself. We are essentially growing on top of the existing corpus by adding the company’s own corpus of text to it, to help generated text be in the voice of the company and, over time, to start using references to actual company knowledge as well.

Zoe: Right. This is something that’s come up on the podcast before that users are so used now to really easily usable software, like having the minimum clicks to get something out. What lots of non-technical users don’t realize is that to get that ease of interaction takes a lot of work behind the scenes, and it’s almost the easier your software is to use, the harder it was to build. That principle’s really in practice with AutogenAI.

Dan: Absolutely, yes. It’s, I don’t want to say our main focus, but it’s one of our main focuses. It’s the stickiness, I don’t want to call it addictiveness, but you do not want to lose this. Once you’ve become fully attuned to how you collaborate with an AI in creating content, that is a lot easier to get to if your software is easy to use. I think if we are rolling this out at a company level, that is actually more important because we have to aim to get everyone on board with this over time.

Whereas if you are aiming this at individuals, whoever’s willing to make the leap out there, then to some extent, that’s a thing you are less worried about because, really, it’s more about getting as many people into the funnel as possible. That’s not really our aim. Our aim is, the people that we do have, we really want to help them to become productive with this and to make it part of their daily writing.

Zoe: Amazing. You mentioned prompt engineers there, which is a new role. I suppose essentially it’s kind of coding but coding into the AI, which as you say, you obviously don’t have a background in because it didn’t exist until very recently. Then I’m guessing you also have machine learning experts and also web developers. You’ve got a lot of different specialisms within your team. What does that leave your role as a CTO being?

Dan: Making sense of it. It’s very easy for the individual specialisms to plow their furrow and to get very invested in the particular challenges they’re facing. Those are all important challenges, but relating them to each other and to what we need to deliver as a coherent vision in order to attract and meet the needs of our end users, that is a wider task, and so is helping everyone to understand how they’re contributing to that and how best they can align themselves so that we’re all pointing in roughly the same direction. If I go back to the plowing the furrow imagery from earlier, that is probably useful when you’re plowing a field, otherwise you’ll bump into each other and end up with a right mess.

Zoe: Right, exactly. What are the key skill sets you need to be able to do that well? Because that sounds to me a bit like playing CTO on hard mode. It’s not just you are the CTO of a team who are all doing jobs you’ve done before and you’ve grown up through the ranks. What would you say are the key skills that people would want to work on or learn?

Dan: I think any developer role, there’s a certain amount of comfort with not knowing everything, with ambiguity, with being able to jump in and find out the detail where necessary. That’s necessary because development of any kind is just so complicated nowadays and there is so much that you don’t know. I think that’s part of being effective as a developer, generally.

In this role, I think a certain amount of willingness to venture into the unknown more so than in previous tasks I’ve taken on, previous challenges I’ve faced. This is moving into a new way of doing things where the rules are not yet written. How do you collaborate with an AI writer? We know how to collaborate with other people on Google Docs when we’re all editing a shared document. Doing that with an AI, it’s mind-bending. In a good way, it’s mind-bending. Also, just speaking as a writer here rather than as a techie, that is a huge opportunity.

It’s also a huge challenge to get to grips with as well. I think a certain openness to different approaches, to understanding more how different types of role, not necessarily all about being purely technically aware, can still contribute. That I think is important because it’s an attempt to help people with the act of creation. Data science and technology and software development, UX, UI, all of those things feed into it, but some of it as well is about understanding the act of writing and helping people to consider how to change some of their patterns to work better with a slightly unusual collaborator.

Zoe: Amazing. It sounds incredible. It strikes me, I mean, I do tend on average to be a bit of an AI naysayer. People are saying, “Oh, it’s going to take over the world. We’re going to have sentient robots.” I’m like, “No, no. We’re not. We’re not, we’re not.” However, this whole area is just progressing so quickly right now. Why do you think that is?

Dan: There’s a thing in most technologies where you get an S-shaped curve where nothing happens for ages and then some major discoveries, inventions happen that open up an entirely new field. There’s a huge amount of progress very quickly in a relatively short amount of time, and then gradually, as the new space gets fully explored, it starts to tail off and settle down. People find ways to be effective in that space.

I think with large language models and other large AI models of a similar type, we have just entered the somewhat more vertical bit of that S-shaped curve. AI has had a number of false dawns. It’s something that people have been studying for a very, very long time. If you think about, say, the Turing test, for example. It’s easy to be somewhat cynical about it, I guess. At the same time, what is happening is that AI, once it becomes part of daily life, generally isn’t called AI any more.

Zoe: [laughs]

Dan: It gets called a whole bunch of different things. It’s already in our lives and has changed our lives quite a lot, but we just don’t think of it as AI. This particular set of technologies, I don’t know, I think it might be just odd and outside the norm enough that it finally sticks and people start thinking that, “Oh yes, this is AI.” It still has a fair way to go. There are still discoveries being made almost every week, and I think there’s a good chance we are not anywhere near the top of this curve.

Zoe: That’s such a good point because, actually, in fact, my whole question is premised on, “What do humans find surprising?” What I’m saying is humans are currently finding this quite surprising that computers can do this, whereas adding up two and two on a computer, it’s still a form of artificial intelligence, right? We’re just not surprised or amazed by it anymore.

Dan: Absolutely. Something like the Turing test, that’s gone by the wayside. If you were looking at that, there are computers now that could happily pass it. We’ve had to develop finer, more and more refined tests to tell the difference between people and AI. Past a certain point, you have to wonder whether it’s got some level of diminishing returns going on, because they’re really quite complex now and you are having to caveat the human beings in those tests and say they are people that know this particular area and are capable of writing eloquently about it.

They are given a long time to answer each question because obviously if you’re up against an AI, an AI can answer in a couple of seconds. It can write entire essays in less than a minute. You see that it is a goal that keeps moving as we get closer to it. I think that it’s a good thing that the goal’s moving, but it makes it difficult to talk about it in an “Oh, have we reached the AI dawn?” sort of way.

Zoe: I see. I see. Well, we’re at some first rays of AI light, maybe. [laughs]. Thank you so much, Dan. This whole area is so fascinating and it does really feel like we’re at the start of something that is going to get bigger and bigger in terms of what these technologies can do. Thank you so much for coming on the show to help us to shine a light for others.

Dan: It’s been a huge pleasure. Who knows? Maybe I’ll see you again in the new AI dawn.

Zoe: Yes. [laughs]. See you there. [laughs]