One of the biggest challenges with Large Language Models (LLMs) is hallucinations. These occur when AI tools confidently produce plausible-sounding answers that are factually incorrect.

Why does this happen? And what can you do to reduce or prevent hallucinations?

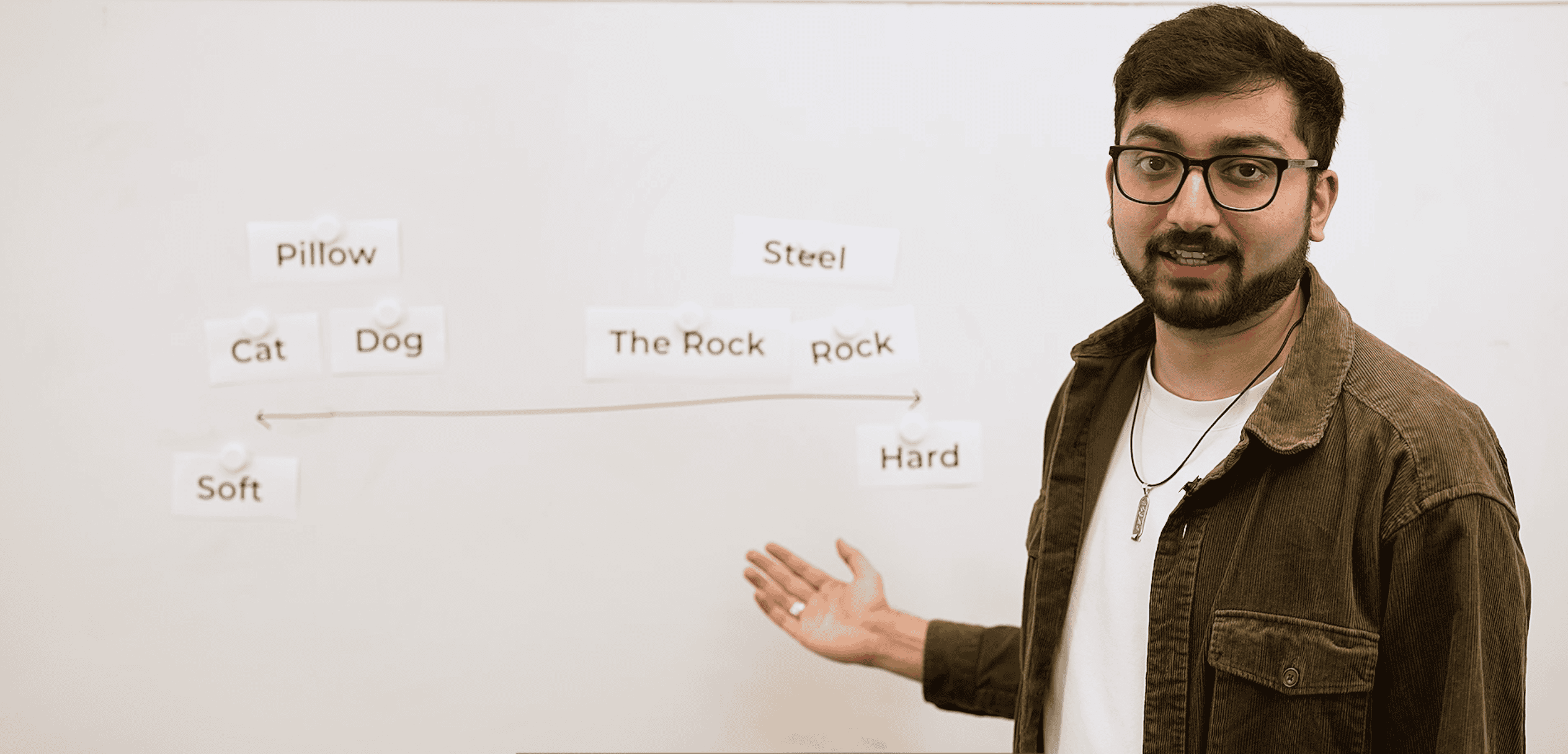

In this episode of our Data and AI Engineering in Five Minutes series, Shivam Chandarana (Technical Lead, Softwire) explains:

- Why hallucinations happen

- How Retrieval-Augmented Generation (RAG) improves LLM workflows

- How RAG enables more personalised and reliable outputs
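The list above mentions Retrieval-Augmented Generation. As a rough illustration only, here is a minimal Python sketch of the retrieve-then-augment idea. It ranks a small set of hypothetical documents against a question using bag-of-words cosine similarity, a simple stand-in for the embedding models and vector stores that real RAG systems typically use. It then builds a prompt that tells the model to answer only from the retrieved context and to admit when it does not know. The function names, sample documents and prompt wording are illustrative assumptions, and the call to an actual LLM is left out because it depends on your provider.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (ties keep input order)."""
    q = Counter(tokenize(query))
    scored = [(cosine_similarity(q, Counter(tokenize(d))), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(query: str, context: list[str]) -> str:
    """Ground the question in retrieved context and tell the model not to guess."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {query}\nAnswer:"
    )


# Hypothetical knowledge base; in practice this would be your own documents.
DOCUMENTS = [
    "Our support desk is open Monday to Friday, 9am to 5pm.",
    "Refunds are processed within 14 days of a return being received.",
    "The mobile app supports offline mode for saved articles.",
]

question = "How long do refunds take?"
prompt = build_prompt(question, retrieve(question, DOCUMENTS, k=1))
# This prompt would then be sent to whichever LLM you use (call omitted).
print(prompt)
```

Because the model is handed the relevant passage and explicitly allowed to say "I don't know", it has less reason to invent an answer. Swapping the documents for your own data is also what makes the output specific to your organisation rather than generic.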