I’m drowning in a data lake(house)

Data! It’s everywhere. If you work for any kind of business or institution, chances are you’re collecting some data – be that sales transactions, customer records or user preferences. While the data you collect and serve will primarily be used to power your application, you might also want to use it to gain valuable insights. What are your best-selling items? What do your customers want?

Before you can make the most out of your business data, you need to decide on a storage option. But which one to choose? In the world of technology, there are often a lot of buzz words and sales talk for proprietary software. You might have heard of platforms like Snowflake and BigQuery; or concepts like data lakes and data warehouses – but what do these terms actually mean?

I find that the first step to understanding concepts properly is to demystify terminology. Once you can sift through the jargon, it’s much easier to focus on the actual use cases. The goal of this article is to give you a simple, intuitive understanding of various data storage architecture terms that are being thrown around a lot. After that, you’ll be able to make your own decisions about whether some of these concepts might be useful to explore further.

A very brief overview of data engineering

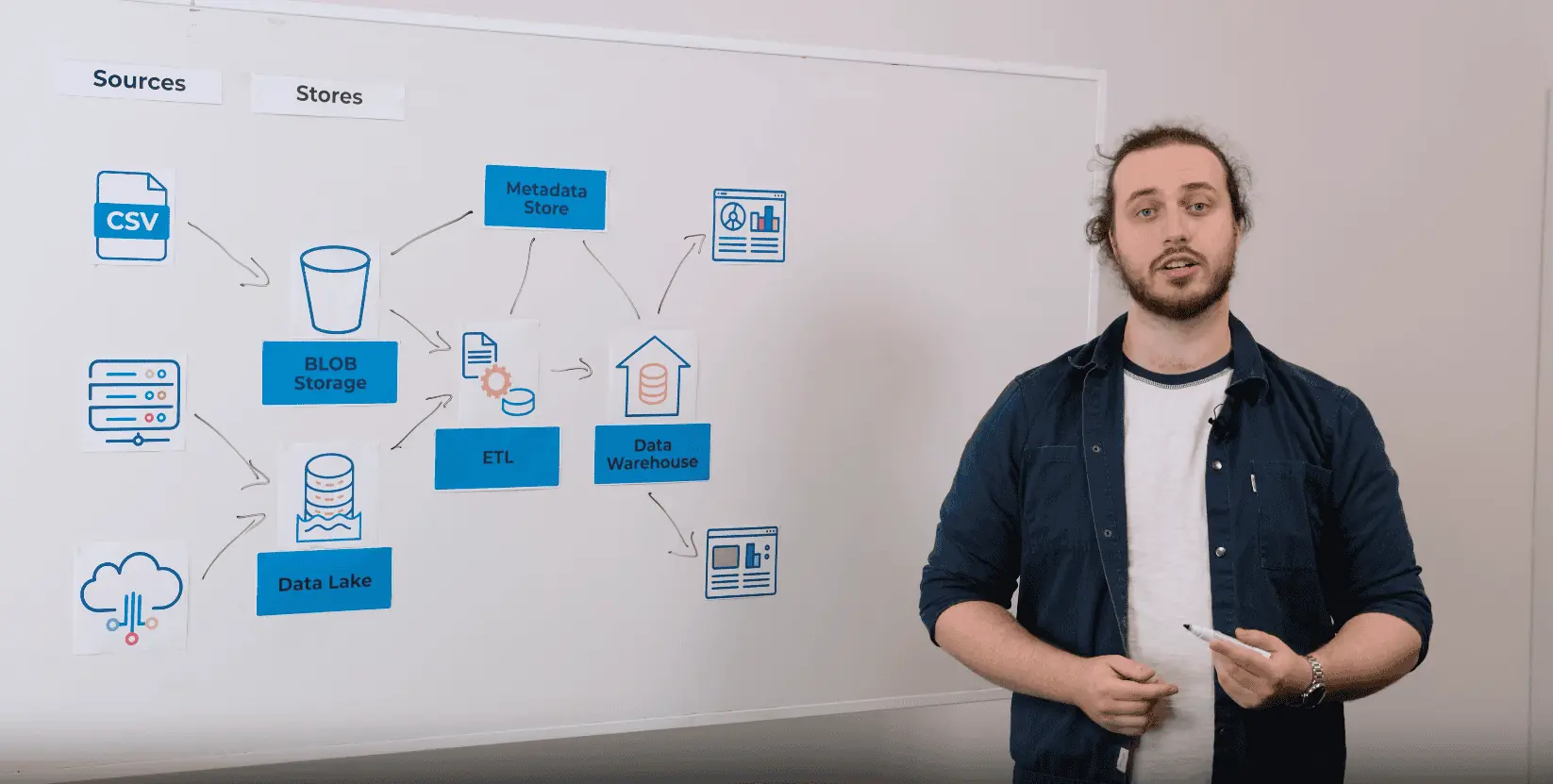

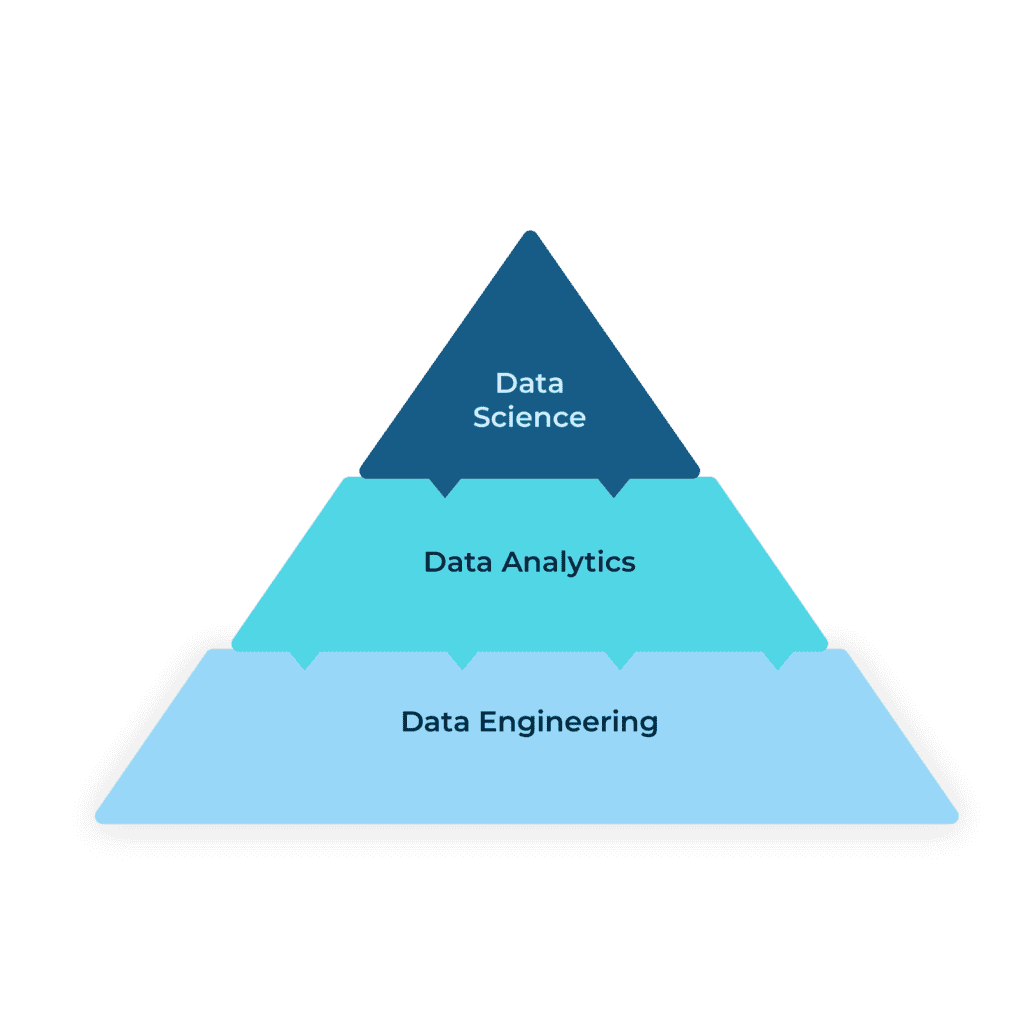

Before delving right into the different concepts, it is useful to understand data engineering as a discipline, and what it enables. Data engineers build the foundational “platform” for receiving, moving and storing data, often in the cloud. This platform can then be used by data analysts, who might want to run reports on the data, and data scientists, who might want to run machine learning algorithms to gain insights.

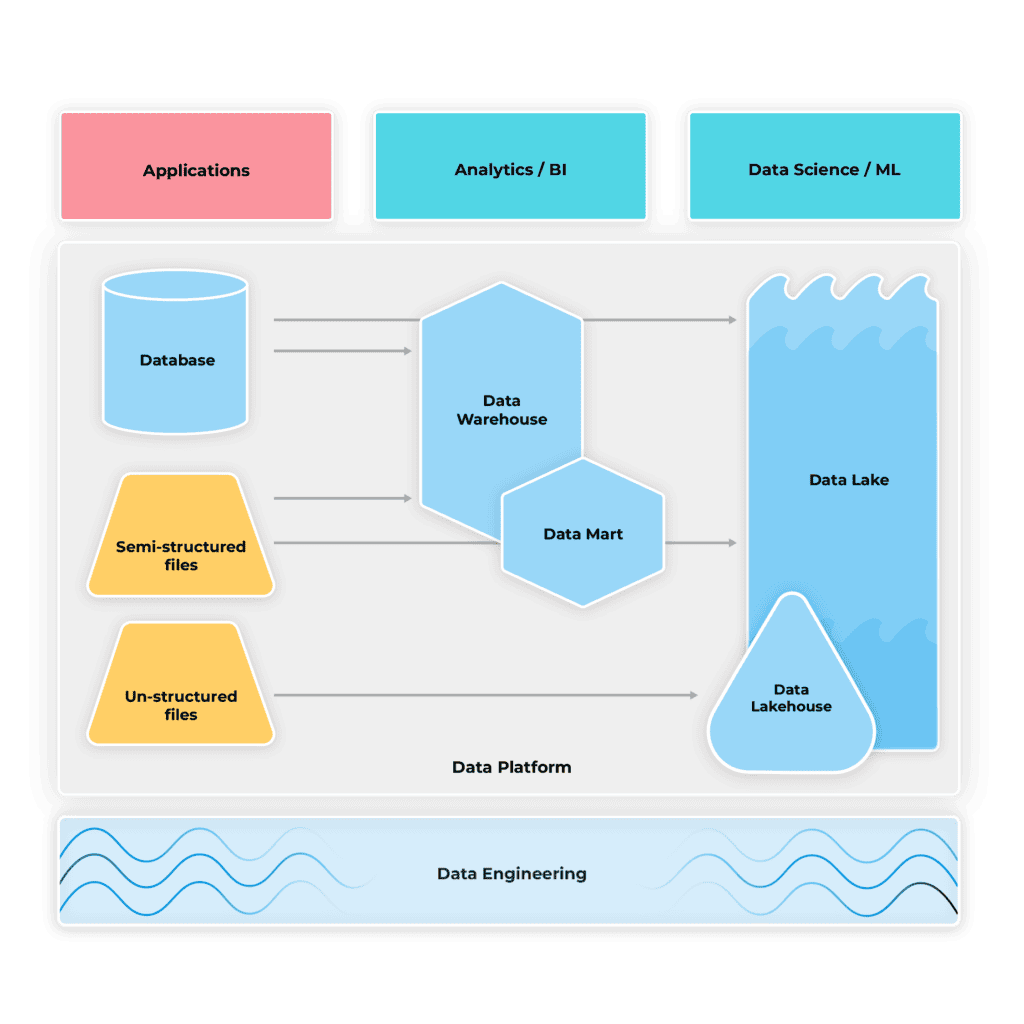

Figure 1: The data engineering pyramid

This interplay of disciplines is nicely represented by a pyramid (see Figure 1), showing that your organisation will struggle to do effective data science if you don’t have a strong data engineering and data analytics foundation. Note that while they enable each other, there is no clear separation between the layers and typically consumers of the data will have concerns that range across the full height of the pyramid.

Data engineering has established itself as a field alongside software engineering in recent years, primarily because the technologies it makes use of have become much more widely available. Whereas in the past you had to buy proprietary software solutions to handle large volumes of data and gain analytical insights, nowadays cloud data products make it easy and affordable (by only charging for actual usage) for any type of business to take advantage of more sophisticated data management solutions.

An overview of current data management solutions

Table 1 provides a quick, handy summary of the terms I will discuss in more detail below. I’ve called them data management solutions to make clear that these are more than just storage options.

| Management Solution | Who is it for? | What? |

|---|---|---|

| Database | (Builders of) Applications | • Optimised for read/write transactions • Relational databases store data using fixed schemas • Non-relational databases store data in semi-structured form, using flexible schemas |

| Data Warehouse | Data Analysts | • Optimised for analytics • A central data hub combining multiple structured and semi-structured sources • Data often modelled using a star schema, with dimension tables describing business entities and fact tables describing business events |

| Data Mart | Data Analysts | • A subset of a data warehouse focused on a particular business domain • Handles joins and aggregations of different data warehouse entities upfront to optimise query performance |

| Data Lake | Data Scientists | • Optimised for machine learning / AI use cases via access to untransformed data • Contain structured, semi-structured and unstructured data (like images) as raw, unprocessed files |

| Data Lakehouse | Data Scientists & Analysts | • Combines data lake & warehouse capabilities into one solution • Uses data lake architecture for object storage, with the addition of a descriptive metadata layer that makes unstructured data queryable in the absence of an ordinary database schema |

Table 1: Overview of data management solutions

A short aside on Data Mesh and Data Fabric

You might have come across the terms “data mesh” and “data fabric” and might have been hoping to see them demystified in this article. I didn’t include them in the table above because they’re not management solutions in themselves, but rather architectural approaches or paradigms that can encompass all the above data technologies. Hence I won’t dwell on them too much, but for completeness and your peace of mind, here is a quick summary of both concepts:

- Data mesh describes an approach where organisations decentralise their data management and let different teams (say finance versus customer support) take charge of their own data end-to-end. Rather than having a central data team that spans the whole organisation, this gives each business unit much more ownership over their data and lets them choose what management option works best for them. The intention of course isn’t to divide teams and not have them talk to each other, quite the opposite! In a data mesh approach, data is regarded like a “product” that each team is responsible for, and which can (and should) be made available to other teams in the organisation via APIs – interfaces – that are managed and maintained by the data owners.

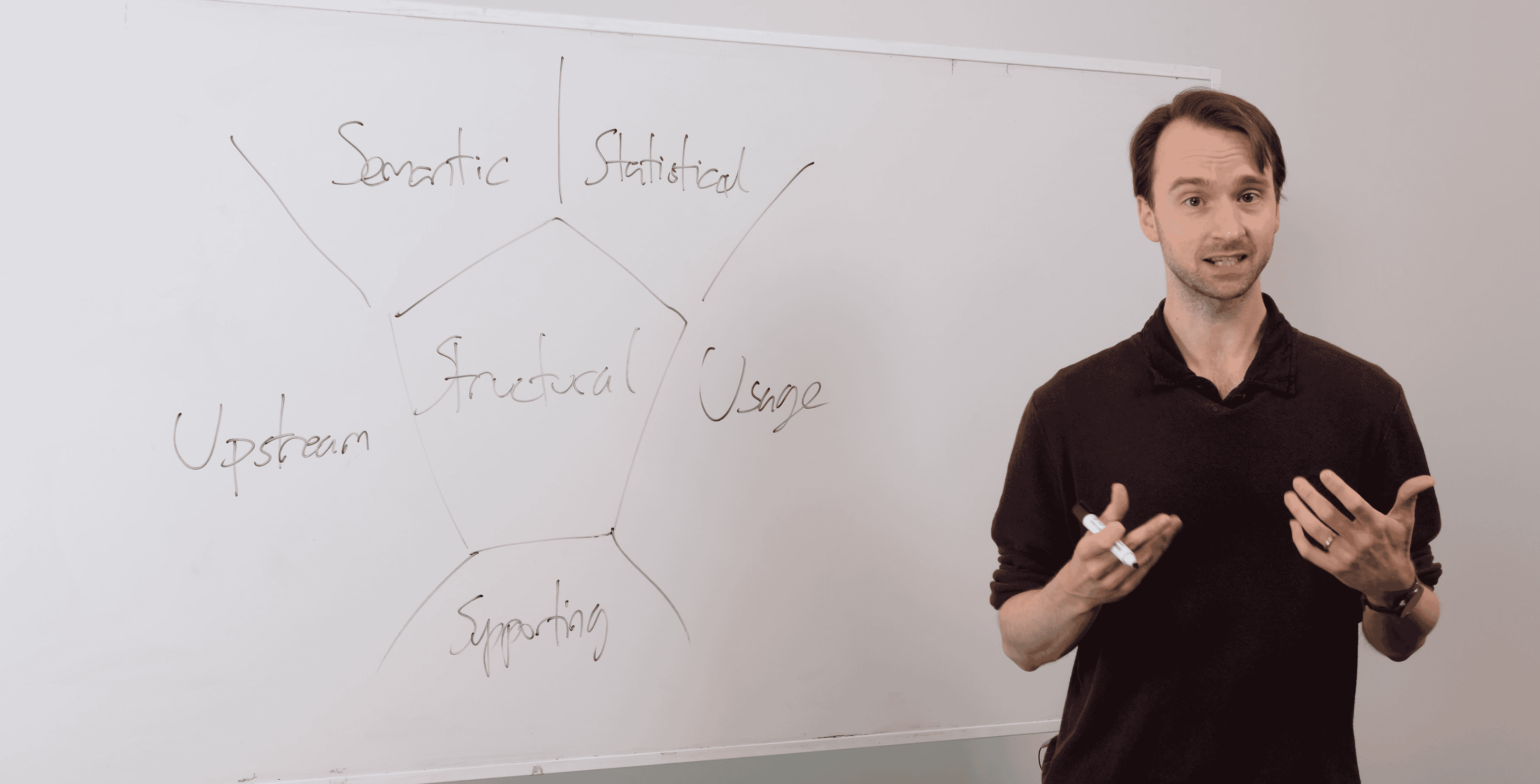

- A data fabric, on the other hand, is a unified, centralised data integration layer which brings together different data storage options, exposing common standards for APIs and reporting solutions. While data mesh decentralises management approaches to give individual teams more ownership, data fabric architectures are useful when there should be a common approach to interact with data across an organisation, especially in areas such as data governance and lineage (which concern themselves with the history and wider context of data).

As both data mesh and data fabric describe conceptual architecture patterns rather than just storage solutions, there aren’t necessarily products that directly map to these terms. Neither are they exclusive of one another. Microsoft Fabric, for example, is a unified platform for data management (so a data fabric) but includes a domain model that enables implementation of the data mesh architecture.

Now we’ve got these two terms out of the way, let’s get back to focusing on the data management options from table 1.

Database – When you just want to read and write data

The term database is probably familiar to most people who’ve ever come across a computer.

Databases support read/write operations that let you retrieve and store data. They’re generally optimised for handling real-time, concurrent transactions: many users can write to the database at the same time, and data can be read back as soon as it’s written.

Most applications you interact with will have a database backing them. Often, a business will have multiple databases focusing on specific business areas. For example, analytics data and user accounts are not necessarily stored in the same database.

Databases can broadly be divided into relational and non-relational databases, and both have their use cases.

Relational databases contain tables whose rows adhere to a specific schema, where each table has pre-defined columns that describe attributes of each element (row) in the table. Different tables will contain different entities (like employees and addresses), which can be related to each other via a “foreign key” column – a reference from one table to another table via a unique identifier. Note that the term “relational” does not actually refer to these inter-table relationships. Instead, a relation is a row of a table, and the rows are relational because of their adherence to the schema. So even in familiar territory, terminology can be misleading!

Non-relational databases, by definition, do not have a fixed schema. Instead, the contents of a non-relational database are semi-structured. This means there is still a format that elements of the same type adhere to, but it’s not fixed. JSON objects or XML files are examples of this – there is a pre-defined way of assigning properties and attributes, but each object doesn’t need to have exactly the same attributes. Non-relational databases are particularly useful for scenarios where your data is flexible and might change in future, for example in applications that contain a lot of user personalisation features.

Note that non-relational databases are also referred to as NoSQL databases. In another example of confusing terminology, this stands for “not only SQL” rather than no SQL, so while they do not prohibit the use of SQL they are generally more flexible than their relational counterparts.

Data Warehouse – When you want to gain analytical insights from your data

What happens if you do have business data not only in databases, but also dotted around in various files, and you want to analyse this?

For example, assume you would like to know how many people in a certain location over a certain time period bought product X. Let’s also assume your customer data is in a CRM, your sales transactions are in a relational database, and you’ve got metadata about your stores in excel files. To make it easier to analyse all this data together, you should consider setting up a data warehouse.

A data warehouse is a central hub that combines large volumes of disparate data sources into a single source-of-truth, with data transformed into a highly structured format that is optimised for analytics. Let’s break this down.

- To combine data sources into a unified, central, highly structured solution, you need to run an ETL (extract, transform, load) process. A data pipeline will ingest (extract) data from your source systems, which can include databases and files, transform it into a new structure and then write (load) it to new location. The data warehouse is thus a layer that sits on top of your original sources.

- How is a data warehouse optimised for analytics? Ordinary transactional database performance suffers when querying large volumes of data filtered across particular business domains. This is because transactional databases avoid duplicating information to achieve fast writes; to get a full picture of the data you need to make a lot of time-intensive joins between tables. Data warehouses, on the other hand, implement data models that are optimised for running large analytics queries. They often follow a star schema, where a central “facts” table contains business events, like the number of sales of a particular product over a particular time period at a particular location. The business entities referred to in this facts table, for example “product”, “accounting period” and “store location”, are stored in their own “dimension” tables. Analysts will typically want to filter the data by these dimensions.

An analogy with a physical warehouse is quite useful here. Like dimensions, rows of shelves usually contain entities of the same type (i.e. you’d expect to find all the sofas and chairs close to each other in the self-service warehouse at IKEA, and not to find flat-pack drawers placed right in between two armchairs). You’d also expect to store a large of volume of quite different, not necessarily related types of objects in a physical warehouse, in the same way that you might have both structured databases and semi-structured files in a data warehouse.

Traditional data warehouses (from vendors such as Oracle and IBM) would require engineers to set up a lot of (expensive) on-premise servers running applications that manage the warehouse for you. Nowadays, there are myriad cloud data warehousing solutions, including Snowflake, BigQuery and Redshift. These make it simple to onboard (via ETL pipelines) and structure your data in a way that is most suitable for running analytics and reporting for your organisation.

Data Mart – When you want to split your analytics into specific business concerns

A data mart is a subset of a data warehouse. It usually focuses on a particular business domain.

Different business departments care about different data sets, and hence don’t need all the data in the warehouse for their reporting. At the same time, a business unit might always care about specific aggregations or joins between different tables (domains). Running these kinds of queries every time before you can analyse the data further can be time-consuming.

A data mart lets you create a highly curated view of a data warehouse for specific consumers of this data. This is achieved by further transforming the source data to be more targeted at a specific business department. Queries can then be run in isolation from other units on only the data you care about, making them faster and more secure.

Data Lake – When you’re interested in using machine learning or AI to get insights

Data lakes have gained increasing popularity in recent years, so you might wonder if you should hop on board too. If all you want from your data is reporting and analysing some well-defined metrics, there is probably no need, but if you want to dabble in machine learning, predictive analytics or generative AI they might just be the thing for you.

A data lake is a centralised file/blob store that contains untransformed data in its raw form, allowing data scientists to train machine learning models on the data.

The name analogy doesn’t work quite as well as for the warehouse, but there is still an intuition from the name that a data lake will be more “fluid” and able to handle different types of objects. Indeed, a data lake can contain structured, semi-structured, as well as truly unstructured data (like pdfs or images), all as raw files.

It’s important for data scientists to access raw data as it provides an unadulterated, unopinionated snapshot of the data at collection time. You don’t risk losing information that might turn out to be useful if you store it exactly as it was received! In practice, for machine learning algorithms to work, the data is typically prepared in an initial step, for example by removing empty values or transforming it into a more useful format for the algorithm (just not as “heavily” as one might transform data in a traditional data warehouse).

To get raw data onto the lake, it is copied directly from the underlying source systems – so effectively an ETL pipeline with no (or minimal) transform step. This decoupling allows the (often CPU intensive) machine learning workflows to run on the lake itself and leave the source system free from additional load. A data lake can also act as the source system for a more structured data warehouse, just as individual source systems and databases can be.

Data lakes are able to handle very large data sets by making use of cheap, highly scalable storage and a separate “compute” engine that runs queries on demand. Hence, they are particularly useful for businesses who have many disparate data sources, including unstructured data, that they would like to run heavy analytics workloads on.

However, a word of warning – as data lakes can ingest all sorts of data without requiring thinking about a schema upfront, there is a risk of them simply becoming a dumping ground for data rather than being used as intended. Just because you can set up a data lake, doesn’t mean you always should!

Data Lakehouse – When you want the best of both worlds

As you might guess from the name, data lakehouses contain features from both data warehouses and data lakes. Specifically, they combine the cheap storage and efficient compute capabilities of a lake with the data management features of a data warehouse: a lakehouse keeps the underlying lake infrastructure but is bolstered with a metadata layer that lets users directly define database schemas, even for unstructured data.

Hence, rather than having a data lake for data science use cases and duplicating the data into a data warehouse sitting on top for BI/analytics use cases, a lakehouse lets you do both in one. Adding a descriptive metadata layer on top of your unstructured data makes it easier to manage updates and deletes, and simplifies running analytics and BI processes, as all the data in the lake can be categorised up-front. Platforms like Databricks have automated processes that can analyse your unstructured data and automatically create a descriptive schema, avoiding a lot of manual setup.

Note that you don’t need to apply structure to all of your lake data – you can keep anything in its raw form if you wish, just like for a data lake.

Data lakehouses could well be the future – presenting a “best of both worlds” for use by data scientists and data analysts, and maybe ultimately replacing the concept of separate lakes and warehouses.

Aside: Convergence between data lakes and cloud data warehouses

Indeed, behind the scenes the technical implementations for data warehouses and data lakes are already converging.

While traditional data warehouses were effectively still normal databases, with data structured to be optimised for analytics, modern cloud data warehouses like Snowflake already use scalable object storage and a separate compute engine to optimise performance under the hood, just as data lakes do.

So while conceptually they are still different, with time we can expect data lakes and data warehouses to blur more and more, and instead allow people to focus on the most important thing: the use cases.

What next?

A picture famously speaks a specific four-digit number of words, so figure 2 summarises the discussion above from the angle of the users of the data.

Figure 2: Relationship between data management solutions and users of the data

Depending on what you want to do with your data, you will sit somewhere on this diagram. Everyone dealing with any kind of application will probably need to worry about databases, but whether you need to set up a data warehouse or a data lake will depend on what your business goals are in the near future.

Obviously, you could say that gaining insights from your data is always a good idea and you wouldn’t be wrong! But you should also weigh up things like running costs and the long-term management and maintenance of solutions you set up for your business. It might be better to start small than follow the hype and start onboarding all your data sources into a data lake architecture, without actually thinking about what you want out of it.

In a way, the underlying data architecture doesn’t matter that much to the end consumer, who will be focused more on use cases than the exact technical solution. At Softwire, we offer clients data engineering kickstarts that set up the foundation for their future data needs. When I describe these in pitches, I like using the term data platform to encompass all the different architecture options, abstracting away the internal implementation from the client and focusing more on what the platform will enable them to do.

While it’s important for the data engineers to understand exactly what they’re building, as the end user, the question you should ask yourself is, “What do I want out of my data?” and let expert data specialists set up the right architecture for you.

Practical tips for data mesh

This handy guide further breaks down the cultural changes required for implementing data mesh into practical steps, showing you how to take advantage of domain and product thinking in any organisation.

Grab your free copy to discover:

Further Reading

This article was written from hands-on experience in the field of data engineering and absorbing many different resources in preparation for writing. While I’ve definitely clicked on many more links to validate my understanding, here is a list of some of the resources I found useful when putting this together:

- J. Reis & M. Housley, Fundamentals of Data Engineering (chapters 3 and 6, overview of data storage options)

- Amazon: Non-relational vs relational databases

- https://www.healthcatalyst.com/insights/database-vs-data-warehouse-a-comparative-review (Data warehouses vs databases)

- https://blog.bismart.com/en/when-choose-data-warehouse-instead-of-database (Data warehouses vs databases)

- https://www.mongodb.com/databases/data-lake-vs-data-warehouse-vs-database (Data lakes vs data warehouses)

- https://blog.purestorage.com/purely-informational/data-mesh-vs-data-fabric-whats-the-difference/ (Data mesh vs data fabric)

- Atlan: Data mesh vs data fabric